As you probably know by now, there is a fully open source ASIC toolchain called OpenLANE and a 130nm PDK from SkyWater Foundries, together with a program called OpenMPW, which allows anyone to produce ASICs from their open source RTL designs completely for free.

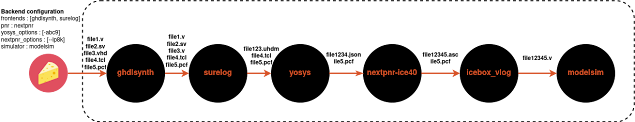

And regular readers probably also know that NLNet Foundation has kindly sponsored an Edalize backend for this toolchain, so that users can easily run their designs through this toolchain just as they would do with a simulation environment or an FPGA implementation.

But wouldn't it be great if there was also a good example design to show how this is actually accomplished? And wouldn't it be good if this example design was small enough to run quickly through the tools, but still complex enough to showcase several of the features that users might want to use in their own designs?

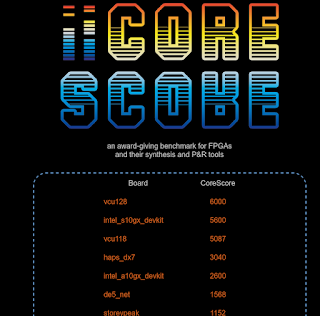

Well, what better design could exist than a small SoC based on the award-winning SERV, the world's smallest RISC-V CPU? This tutorial will show how we can take an existing building block, in this case SERV, turn it into an ASIC-friendly SoC, run it in simulation, make an FPGA prototype and finally have it manufactured as an ASIC, all while using FuseSoC and Edalize to hide most of the differences between these vastly different tool environments. Afterwards, you should be able to use the same process and thinking to turn your own designs into FuseSoC packages that can be used with different tools and easily reused in your own and other people's designs.

Figure: From design to simulation to FPGA to ASIC. FuseSoC+Edalize helps you all the way.

Let's start by looking at what we can do to make an ASIC-friendly SoC out of our good friend SERV.

Creating an ASIC-friendly SoC

For FPGA implementations, there is a reference design for SERV called the Servant SoC. That one is unfortunately not so well suited for ASIC implementation, for two reasons. The main one is that it relies on the data/instruction memory being preinitialized with an application that we can run, which is not something we can easily support in an ASIC. The other reason is also memory-related. The Servant SoC uses separate memories for the RF and for instruction+data, but SERV supports using a single shared memory for both, which allows for an even smaller footprint.

Figure: Servant SoC, the reference platform for running SERV on FPGA.

So with these things taken into consideration, we look at how to design our SoC, called Subservient. An obvious inspiration for this is the Serving SoClet, which uses the aforementioned shared memory setup. For the Subservient SoC, however, we need to move the actual RAM macro out of the innards of the design so that we can instead connect the RAM and the Subservient SoC as hard macros. Related to this, we also introduce a debug interface that we can use to write to the memory while SERV is held in reset, since we can't rely on preinitialized memory content.

Figure: The Serving SoClet. A building block for making tiny SoCs. Just add peripherals.

We can reuse the arbiter and mux from the serving SoClet as is (reuse is good!), but use a slightly modified version of the RAM IF that basically just introduces a Read Enable for the RAM. The debug interface is just a dumb mux that assumes there aren't any memory accesses in flight when the switch is toggled. The RAM interface module that turns the internal 32-bit accesses to 8-bit accesses towards the RAM is the only moderately complex addition, and this is a great thing! It means that we have been able to reuse most of the code and have less untested code to deal with. The resulting architecture looks like this.

Figure: The core of the Subservient SoC. Made for portable ASIC implementation. Needs RAM, peripherals and a way to initialize the instruction/data memory.

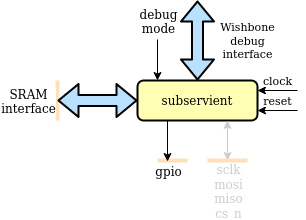
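To make the job of the RAM interface module more concrete, here is a minimal behavioural sketch in Python of splitting one 32-bit write into four 8-bit writes. It is not derived from the actual RTL: the function names are hypothetical, and the least-significant-byte-first ordering is an assumption based on RISC-V being little-endian.

```python
def split_word_write(word_addr, data):
    """Split one 32-bit write into four 8-bit writes.

    Assumption: bytes are stored least significant first (little-endian).
    Returns a list of (byte_address, byte_value) tuples.
    """
    if word_addr % 4 != 0:
        raise ValueError("word address must be 4-byte aligned")
    return [(word_addr + i, (data >> (8 * i)) & 0xFF) for i in range(4)]


def join_bytes(byte_values):
    """Reassemble four bytes (least significant first) into a 32-bit word."""
    word = 0
    for i, value in enumerate(byte_values):
        word |= (value & 0xFF) << (8 * i)
    return word


writes = split_word_write(0x10, 0xDEADBEEF)
for addr, value in writes:
    print(f"addr=0x{addr:02x} data=0x{value:02x}")
```

A read goes the other way: four byte reads are collected and joined back into one word, which is what `join_bytes` models.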

The subservient_core exposes a Wishbone interface where we can hook up peripheral devices. Since we want the simplest thing possible, we just terminate the peripheral bus in a 1-bit GPIO controller and make a wrapper. The greyed out blocks are potential future additions.

Figure: The simplest I/O configuration for Subservient. Just a single output pin.

And for someone looking at it from the outside, it looks like this.

Now that we have a design we want to do some testing. For this, we create a testbench that contains a SoC hooked up to a model of the OpenRAM macro that we intend to use in the SkyWater 130nm OpenMPW tapeout and a UART decoder so that we can use our GPIO as a UART. We also add some hastily written lines of code to read a Verilog hex file and write the contents to memory through the debug interface before releasing the SoC reset. This task would be handled by the Caravel harness in the real OpenMPW setup.

Figure: Subservient testbench. Starts by loading a program to the simulated RAM through the debug interface and then hands over to SERV to run.
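The firmware loading step in the testbench can be illustrated with a small Python sketch. It parses text in the format read by Verilog's `$readmemh` (hex tokens, `@address` directives, `//` comments) and writes the values into a list that stands in for the RAM. The function names are hypothetical, block comments and x/z digits are not handled, and treating every token as one memory location is an assumption about how the hex file is organised.

```python
def parse_verilog_hex(text):
    """Return (address, value) pairs from $readmemh-style text."""
    entries = []
    addr = 0
    for line in text.splitlines():
        line = line.split("//", 1)[0]  # drop line comments
        for token in line.split():
            if token.startswith("@"):
                addr = int(token[1:], 16)  # address directive
            else:
                entries.append((addr, int(token.replace("_", ""), 16)))
                addr += 1
    return entries


def load_memory(entries, memsize):
    """Model writing each entry to a RAM of memsize locations."""
    mem = [0] * memsize
    for addr, value in entries:
        if addr >= memsize:
            raise ValueError(f"address 0x{addr:x} is outside the memory")
        mem[addr] = value
    return mem


example = "// tiny demo image\n@0\n13 00 00 00\n@8\n6f 00\n"
entries = parse_verilog_hex(example)
mem = load_memory(entries, 16)
print(entries)
```

In the real testbench the writes go through the debug interface while SERV is held in reset; the list here only models the end result in memory.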

Adding FuseSoC support

We are almost ready to run some simulations with FuseSoC. The last thing remaining is to write the core description file so that FuseSoC knows how to use the core. Once you have a core description file, you will be able to easily use it with almost any EDA tool, as we will soon see. Having a core description file is also an excellent way to make it easier for others to use your core or, conversely, to pull in other people's cores in your design.

We begin with the CAPI2 boilerplate.

    CAPI=2:
    name : ::subservient:0.1.0

Next up we create filesets for the RTL. Filesets are logical groups of the files that build up your design. You can have a single fileset for your whole design or a fileset each for your files. The most practical way is often to have a fileset for the core RTL, one for each testbench, and separate ones for files that are specific for implementation on a certain FPGA board etc. This is also what we will do here.

Filesets are also where you specify dependencies on other cores. In our case, subservient_core instantiates components from the serving core (or package, to use the software equivalent of a core), so we add a dependency on serving here. The serving core in turn depends on the serv core. This means we don't have to care about the internals of either serving or SERV. Their respective core description files take care of the details for us. And if some larger project wants to depend on the Subservient SoC, the core description file we are about to write will take care of that complexity for them.

The testbench fileset uses an SRAM model available from a core called sky130_sram_macros and also our trusty testbench utility core, vlog_tb_utils. Finally we add a couple of test programs in Verilog hex format.

    filesets:
      core:
        files:
          - rtl/subservient_rf_ram_if.v
          - rtl/subservient_ram.v
          - rtl/subservient_debug_switch.v
          - rtl/subservient_core.v
        file_type : verilogSource
        depend : [serving]
      mem_files:
        files:
          - sw/blinky.hex : {copyto : blinky.hex}
          - sw/hello.hex : {copyto : hello.hex}
        file_type : user
      tb:
        files:
          - tb/uart_decoder.v
          - tb/subservient_tb.v
        file_type : verilogSource
        depend : [sky130_sram_macros, vlog_tb_utils]
      soc:
        files:
          - rtl/subservient_gpio.v
          - rtl/subservient.v
        file_type : verilogSource

We then define the user-settable parameters to allow us to easily change test program from the command-line, experiment with memory sizes and decide whether the GPIO pin should be treated as a UART or a regular I/O pin.

    parameters:
      firmware:
        datatype : file
        description : Preload RAM with a hex file at runtime
        paramtype : plusarg
      memsize:
        datatype : int
        default : 1024
        description : Memory size in bytes for RAM (default 1kiB)
        paramtype : vlogparam
      uart_baudrate:
        datatype : int
        description : Treat gpio output as an UART with the specified baudrate (0 or omitted parameter disables UART decoding)
        paramtype : plusarg

Finally we bind it all together by creating a simulation target. Targets in the core description files are end products or use cases of the design. In this case, we define a target so that we can run the design within a testbench in a simulator. Targets are also where we reference the filesets and parameters that were defined earlier. This allows us to use different subsets of the core for different targets. We also throw in a derivative sim_hello target as a shortcut to run the other test program, a default target, and a lint target so that we can get quick feedback on any potential design mistakes.

    targets:
      default:
        filesets : [soc, core]
      lint:
        default_tool : verilator
        filesets : [core, soc]
        tools:
          verilator:
            mode : lint-only
        toplevel : subservient
      sim: &sim
        default_tool: icarus
        filesets : [mem_files, core, soc, tb]
        parameters :
          - firmware
          - memsize
          - uart_baudrate
        toplevel : subservient_tb
      sim_hello:
        <<: *sim
        parameters :
          - firmware=hello.hex
          - memsize=1024
          - uart_baudrate=115200
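The sim_hello target relies on a YAML anchor (&sim) and merge key (<<: *sim). As a rough illustration of what that means, here is a Python sketch that models the two targets as dictionaries. The helper name `apply_merge_key` is made up for this example and this is not how FuseSoC is implemented internally; it only mirrors the YAML rule that keys written explicitly in a mapping take precedence over merged ones.

```python
def apply_merge_key(base, overrides):
    """Model a YAML merge key: start from base, explicit keys win."""
    merged = dict(base)       # everything inherited from the anchor
    merged.update(overrides)  # keys given explicitly replace inherited ones
    return merged


sim = {
    "default_tool": "icarus",
    "filesets": ["mem_files", "core", "soc", "tb"],
    "parameters": ["firmware", "memsize", "uart_baudrate"],
    "toplevel": "subservient_tb",
}

sim_hello = apply_merge_key(
    sim,
    {"parameters": ["firmware=hello.hex", "memsize=1024", "uart_baudrate=115200"]},
)

for key, value in sim_hello.items():
    print(f"{key}: {value}")
```

Note that the parameters list is replaced as a whole rather than merged item by item, which is why sim_hello repeats all three parameter names.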

With this in place we can now run

$ fusesoc run --target=sim_hello subservient

which will run the testbench with the hello.hex program loaded and the GPIO output interpreted as a UART with 115200 baud rate. The output should eventually look something like this.

Figure: Running our first FuseSoC target on Subservient.

We can run any other program like this, for example the blinky example which toggles the GPIO pin on and off, by supplying the path to a Verilog hex file containing a binary:

$ fusesoc run --target=sim subservient --firmware=path/to/subservient/sw/blinky.hex

We won't go into detail on how to prepare a Verilog hex file, but there's a Makefile in the subservient sw directory with some rules for converting an ELF file to a hex file.

And as usual, you can list all targets with

$ fusesoc core show subservient

and get help about all available options for a specific target by running

$ fusesoc run --target=<target> subservient --help

Prototyping on FPGA

All right then. Simulations are nice and all, but wouldn't it also be good to have this thing running on a real FPGA as well? Yes, but let's save ourselves a bit of work. In the simulation we could load a file through the debug interface from our testbench. In the ASIC version, that task will be handled by someone else. But to avoid having to implement an external firmware loader in Verilog for the FPGA case, we use the FPGA's capability of initializing the memories during synthesis instead. Remember, always cheat if you have the option!

What board we want to use does not really matter. We can probably just take any random board we have at hand and add the proper pinout and clocking. I happened to have a Nexys A7 within arm's reach so let's go with that one.

We put the FPGA-specific clocking in a separate file so that we can easily switch it out if we want to run on a different board. Next up we add an FPGA-compatible SRAM implementation that supports preloading. We can steal most of the logic for the memory and clocking as well as a constraint file from the Servant SoC (Remember, always steal if you have the option!). Finally we add the subservient SoC itself, connect things together and put them in a new FPGA toplevel like this.

Figure: FPGA-friendly version for quick prototyping of our ASIC-friendly SoC.

We're now ready to build an FPGA image but it's probably a good idea to run some quick simulations first to check we didn't do anything obviously stupid. All we need for that is a testbench, and since the Subservient FPGA SoC is very similar to the Servant SoC from the outside, we just take the Servant testbench and modify it slightly. We also put in a clock generation module that just forwards the clock and reset signals. With the code in place we add the necessary filesets

    fpga:
      files:
        - rtl/subservient_generic_sram.v : {file_type : verilogSource}
        - rtl/subservient_fpga.v : {file_type : verilogSource}
    fpga_tb:
      files:
        - tb/subservient_fpga_clock_gen_sim.v : {file_type : verilogSource}
        - tb/subservient_fpga_tb.cpp : {file_type : cppSource}

and the target for the fpga testbench

    fpga_tb:
      default_tool : verilator
      filesets : [core, soc, mem_files, fpga, fpga_tb]
      parameters: [firmware, uart_baudrate=46080]
      tools:
        verilator:
          verilator_options : [-trace]
      toplevel: subservient_fpga

to the core description file. Note that we're using a baud rate of 46080. That's because we define the testbench to run at 40MHz instead of 100MHz (for reasons that will become clear later) and then we must scale down that baud rate to 40% of 115200. Let's give it a shot by running

$ fusesoc run --target=fpga_tb subservient

Figure: Works in simulation! This increases our confidence in the FPGA implementation.

Works like a charm. Now we have more confidence when going to FPGA.
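As a quick sanity check of the 46080 baud figure used in the fpga_tb target, here is the scaling as a few lines of Python. The function name is hypothetical, and the underlying assumption (my reading of the setup, not something stated explicitly) is that the test program spends a fixed number of clock cycles per UART bit, so the effective baud rate scales linearly with the clock frequency.

```python
def scaled_baudrate(ref_baud, ref_clk_hz, new_clk_hz):
    """Scale a baud rate linearly with the clock frequency."""
    return ref_baud * new_clk_hz // ref_clk_hz


# 115200 baud at 100 MHz becomes 40% of that at 40 MHz
print(scaled_baudrate(115200, 100_000_000, 40_000_000))  # prints 46080
```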

There are a couple of things that differ between our simulation and an actual FPGA target. Instead of a testbench we need to add an FPGA-specific clocking module and a pin constraint file for our board. So let's put them in a new fileset and then create a target referencing these files and telling the EDA tool (Vivado) what FPGA we're targeting.

    filesets:
      ...
      nexys_a7:
        files:
          - data/nexys_a7.xdc : {file_type : xdc}
          - rtl/subservient_nexys_a7_clock_gen.v : {file_type : verilogSource}

    targets:
      ...
      nexys_a7:
        default_tool: vivado
        filesets : [core, soc, mem_files, fpga, nexys_a7]
        parameters: [memfile]
        tools:
          vivado: {part : xc7a100tcsg324-1}
        toplevel: subservient_fpga

Voilà! Now we can run our FPGA build with

$ fusesoc run --target=nexys_a7 subservient

If everything goes according to plan and we have the board connected, it will be automatically programmed. Using our favorite terminal emulator and setting the correct baud rate should then give us the following output.

Wow! It's just like in the simulation...which is kind of the idea

Alright then, simulation and FPGA is all good, but our original idea was to put this in an ASIC. Sooo....how do we do that?

Making an ASIC target

The good news is that we have actually done most of the work already, and this is very much the point of FuseSoC and Edalize. It allows you to quickly retarget your designs for different tools and technologies without having to do a lot of tool-specific setup every time. Now, OpenLANE is a bit special compared to other EDA tool flows so there will be a couple of extra bumps in the road, but hopefully these will be smoothed out over time.

Since we already have an Edalize backend for the OpenLANE toolchain, all we need to do is add any technology- and tool-specific files and invoke the right backend. OpenLANE can be operated in several different ways, but the Edalize integration currently works by adding TCL files with OpenLANE configuration parameters that OpenLANE picks up. Edalize also assumes that it will find an executable called flow.tcl and that a usable PDK is installed where OpenLANE can find it.

So on the tool configuration side, all we need to do is add a TCL file containing the parameters we want to set. And the only things we are strictly required to put into this file are the default clock signal and the target clock period.

set ::env(CLOCK_PERIOD) "25"

set ::env(CLOCK_PORT) "i_clk"
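CLOCK_PERIOD is given in nanoseconds, so the value 25 corresponds to a 40 MHz clock, the same frequency that the FPGA testbench was set up to run at. The following Python sketch shows the conversion and produces the two lines above from a target frequency. The helper names are hypothetical and are not part of OpenLANE or Edalize.

```python
def clock_period_ns(freq_mhz):
    """Convert a clock frequency in MHz to a period in nanoseconds."""
    return 1000 / freq_mhz


def openlane_clock_settings(freq_mhz, clock_port):
    """Return the two TCL lines for the clock period and clock port."""
    period = clock_period_ns(freq_mhz)
    return [
        f'set ::env(CLOCK_PERIOD) "{period:g}"',
        f'set ::env(CLOCK_PORT) "{clock_port}"',
    ]


for line in openlane_clock_settings(40, "i_clk"):
    print(line)
```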

There are a million other parameters that can be set as well to control size, density and different routing strategies, so I encourage everyone to read the OpenLANE docs and experiment a bit. This time, though, we just add the aforementioned settings to a TCL file and add a fileset and target.

    filesets:
      ...
      openlane:
        files:
          - data/sky130.tcl : {file_type : tclSource}

    targets:
      ...
      sky130:
        default_tool: openlane
        filesets : [core, soc, openlane]
        parameters :
          - memsize
        toplevel : subservient

Seriously, it's not harder than that. We're now ready to run OpenLANE and have our GDS file. The thing is, though, that it can be a bit finicky to install the toolchain and the PDK. Building the PDK from sources using the official instructions requires downloading gigabytes of Conda packages, keeping track of a number of git repositories and a somewhat convoluted build process. There are several disjointed attempts at providing a pre-built PDK, but at the time of writing there didn't seem to be an agreement on how to do that. Also, the OpenLANE toolchain itself is a bit special in that the recommended way of running it is from a Docker image rather than installing it directly. So, with these two facts at hand, we decided to simply prepackage a Docker image with OpenLANE and a PDK included. This image gets updated from time to time, but in general it's a bit behind the upstream version. But that's totally fine. There's seldom any need for running the absolutely latest versions of everything.

Launcher scripts

But how then do we run OpenLANE from the Docker image? For that we use another one of Edalize's nifty features: launcher scripts! Normally, Edalize calls the EDA tools it wants to run directly, but we can also tell Edalize to use a launcher script. A launcher script is a script (or any kind of program, really) that gets called instead of the EDA tools. The launcher script is also passed the original command line as parameters so that it can make decisions based upon what Edalize originally intended to run.

In this case, Edalize wants to run flow.tcl -tag subservient -save -save_path . -design . when we invoke the OpenLANE backend. If we tell Edalize to use a custom launcher, which we choose to call el_docker, the command line instead becomes el_docker flow.tcl -tag subservient -save -save_path . -design .

If we just want the launcher script to do something special when OpenLANE is launched, then we simply check if the first argument is flow.tcl. In that case we do something special, or otherwise call the original command-line as usual. Simple as that.

So what special magic do we want to do for OpenLANE? We want to run flow.tcl from our OpenLANE+PDK Docker image and at the same time make our source and build tree available within the image. The whole command in its simplest form looks something like this when invoked from the Edalize work root

$ docker run -v $(pwd)/..:/src -w /src/$(basename $(pwd)) edalize/openlane-sky130:v0.12 flow.tcl -tag subservient -save -save_path . -design .
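To show how the dispatch and the Docker wrapping fit together, here is a minimal launcher sketch in Python. It is a simplified illustration and makes no claim about how any existing launcher is written: the function name `build_command` is hypothetical, the image name is taken from the command above, and the work root is assumed to be an absolute POSIX path without a trailing slash.

```python
import posixpath

# Docker image name as used in the docker command above
IMAGE = "edalize/openlane-sky130:v0.12"


def build_command(argv, work_root):
    """Return the command line a launcher would actually execute.

    argv is the original tool command line, work_root the directory
    the tool was meant to run in.
    """
    if argv and argv[0] == "flow.tcl":
        parent = posixpath.dirname(work_root)  # mounted as /src
        name = posixpath.basename(work_root)   # becomes the working directory
        return [
            "docker", "run",
            "-v", f"{parent}:/src",
            "-w", f"/src/{name}",
            IMAGE,
        ] + list(argv)
    # Any other tool is passed through untouched
    return list(argv)


cmd = build_command(
    ["flow.tcl", "-tag", "subservient", "-save", "-save_path", ".", "-design", "."],
    "/home/user/build/sky130-openlane",
)
print(" ".join(cmd))
# A real launcher would finish with something like:
#   sys.exit(subprocess.call(build_command(sys.argv[1:], os.getcwd())))
```

Keeping the command construction in a pure function makes the dispatch logic easy to test without having Docker installed.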

We could make this script a bit nicer if we want, so that we run as the ordinary user instead of as root, and so on, but this has in fact already been taken care of. The aforementioned el_docker launcher script already exists and is installed together with Edalize. And not only does it support running OpenLANE through Docker but also a whole bunch of other tools like verilator, icarus, yosys, nextpnr and so on. So you can just as well use this script for simulation and FPGA purposes if you for some reason don't want to natively install all these EDA tools. The proprietary tools are for obvious reasons not runnable this way, since the EDA vendors would probably get very, very angry if we put their precious tools in containers and published them for everyone to use. Hopefully we can completely avoid proprietary tools some day, but not yet.

Anyway, so how do we tell Edalize to use a launcher script? Currently, this is done by setting the EDALIZE_LAUNCHER environment variable before launching FuseSoC (which launches Edalize).

So, our final command will be:

$ EDALIZE_LAUNCHER=el_docker fusesoc run --target=sky130 subservient

And with that, my friends, we have built a GDS file for the Subservient SoC that we can send to the fab and get real chips back. And this we did, but that's for another day. So let's just lean back and take in the beauty of the world's smallest RISC-V SoC, created by open source tools, and think a bit about how incredibly easy it was thanks to FuseSoC and Edalize (and of course NLNet who funded the Subservient SoC and integration of OpenLANE and Edalize).

And now, it's your turn to do the same with your own designs. Good luck!