Looks like it's time for a new Edalize release. During this development cycle, most of the work has been done under the hood, creating a new internal architecture and refactoring many of the backends. Most of those efforts will bear fruit in the longer term, but we can already see the initial work on the flow API, which has been planned for at least two years. We also welcome a new backend for Lattice Nexus devices and some miscellaneous feature additions and bug fixes. Read on for the full story on what makes Edalize 0.3.0 the best Edalize (and likely the best EDA tool interfacing framework) ever!

Flow API

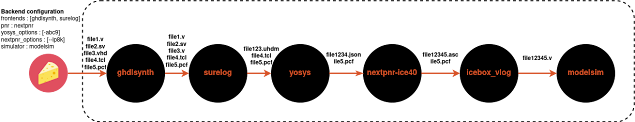

Edalize today has almost 30 backends. That's a lot of backends! Each of these backends maps to a primary tool. The icarus backend runs Icarus Verilog, the quartus backend runs Quartus, the vivado backend runs Vivado and so on. Ok, that's not strictly true. The vivado backend can also optionally use Yosys for synthesis. But still, Vivado is the primary tool here. But then we have the icestorm, trellis and apicula backends that first run Yosys, then nextpnr (or in the case of icestorm optionally Arachne-PnR) and then finally run some target-specific bitstream generation tool, which is what has provided the name for the backend. Even though much of the heavy lifting is done by Yosys and nextpnr, it's still reasonable to name the backend after the distinguishing part.

Figure: Current tool-centric Edalize backend with the configure, build and run stages

But what if we want to do a timing simulation of a routed design for a Xilinx device using QuestaSim? In that case we want to run most of the Vivado toolchain before switching over to the simulator. We see both that the naming scheme is starting to fall apart and that the current architecture isn't capable of doing this. And apart from the use cases that do work really well, we can also find plenty that don't. For example, look at the VUnit and (the currently proposed) Cocotb backends. Both these projects could be far better integrated with Edalize if the backends weren't treated as monolithic things. The solution to this is the new flow API, which allows arbitrary tools to be hooked up in a flow graph, using EDAM structures to pass information between them.
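To make the flow graph idea a bit more concrete, here is a minimal sketch in plain Python. It is not how Edalize implements it. The node functions, the `run_flow` helper and the output file types are all made up for illustration, and the graph is a simple linear chain. The only point is that each node takes an EDAM-like dictionary and hands a new one to the next node.

```python
# Illustrative toy only: none of these names come from Edalize itself.
from graphlib import TopologicalSorter  # Python 3.9+


def synth(edam):
    # Pretend synthesis: swap the source files for a netlist
    out = dict(edam)
    out["files"] = [{"name": edam["name"] + ".json", "file_type": "jsonNetlist"}]
    return out


def pnr(edam):
    # Pretend place and route: swap the netlist for a routed design
    out = dict(edam)
    out["files"] = [{"name": edam["name"] + ".asc", "file_type": "routedDesign"}]
    return out


def bitgen(edam):
    # Pretend bitstream generation: swap the routed design for a bitstream
    out = dict(edam)
    out["files"] = [{"name": edam["name"] + ".bin", "file_type": "bitstream"}]
    return out


NODES = {"synth": synth, "pnr": pnr, "bitgen": bitgen}

# Maps each node to the set of nodes it depends on
DEPS = {"synth": set(), "pnr": {"synth"}, "bitgen": {"pnr"}}


def run_flow(edam):
    """Run the nodes in dependency order, passing the EDAM dict along."""
    order = list(TopologicalSorter(DEPS).static_order())
    for name in order:
        edam = NODES[name](edam)
    return order, edam


if __name__ == "__main__":
    start = {
        "name": "blinky",
        "files": [{"name": "blinky.v", "file_type": "verilogSource"}],
    }
    order, result = run_flow(start)
    print(order)
    print(result["files"])
```

Swapping the last node for a simulator instead of a bitstream generator is then just a matter of changing the graph, which is the kind of flexibility the tool-centric backends lack.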

Separating the execution of the individual EDA tools and the execution of the flow graph into two distinct problems also allows future improvements such as using cloud orchestration tools or workload managers to direct the tool execution rather than a local Makefile, which is the case today. The new flow API also comes with a new abstraction layer for executing EDA tools, which allows us to add custom launchers for our tools more consistently. This has already been used to great effect for seamlessly running a combination of dockerized and local tools.

There are still a lot of things that need to be properly documented, features to add, and many of the existing backends still need to be ported over to the new flow API. The good news is that an initial version of the flow API is shipping with Edalize 0.3.0, so you can try it out right away. And it has already brought some new features that weren't available before, like using Surelog or sv2v as frontends to bring SystemVerilog support to tool flows that don't natively support it. Here's a quick example of how to build a blinky for the icestorm flow with the new API:

    from edalize.flows.icestorm import Icestorm

    edam = {}

    print("Adding files")
    files = [{'name' : 'blinky.v', 'file_type' : 'verilogSource'}]

    print("Setting parameters")
    parameters = {
        'clk_freq_hz' : {'datatype' : 'int', 'default' : 1000000, 'paramtype' : 'vlogparam'}}

    print("Setting flow options")
    flow_options = {
        'nextpnr_options' : ['--lp8k', '--package', 'cm81', '--freq', '32']}

    print("Creating EDAM structure")
    edam = {
        'name' : 'blinky',
        'files' : files,
        'toplevel' : 'blinky',
        'parameters' : parameters,
        'flow_options' : flow_options,
    }

    print("\nInstantiating the Icestorm class with current dir as build directory")
    icestorm = Icestorm(edam, '.')

    print("\nconfigure writes the Icestorm configuration files but doesn't run any of the tools")
    icestorm.configure()

    print("Now we run the EDA tools")
    #This needs the actual EDA tools and the blinky verilog file (https://github.com/fusesoc/blinky/blob/master/blinky.v)
    icestorm.build()

Or just inspect which options are available for a specific flow:

    from edalize.flows.icestorm import Icestorm

    print("\nAvailable options for the icestorm flow")
    print("=======================================\n")
    for k,v in Icestorm.get_flow_options().items():
        print(f"{k} : {v['desc']}")

A slightly more involved example can also be found here

As for the next step, FuseSoC will be updated to take advantage of the new flow API.

prjoxide backend

A new family of Lattice devices called Nexus has been documented under the name Project Oxide. This has resulted in a new Yosys->nextpnr->bitstream generation flow, which now has a corresponding Edalize backend.

Docker launcher script

The Edalize backends are rapidly gaining support for using a custom launcher, which is selected by setting the EDALIZE_LAUNCHER environment variable before running Edalize. This has been used to great effect already and serves many use cases, and it will be the topic of a separate blog post some time in the future. But for now we will look at the major change for Edalize. As of this version, Edalize ships with an extra script called el_docker, el meaning Edalize Launcher. Running containerized versions of the open source EDA tools has been a frequent use case of the Edalize launcher mechanism, but it has required an external launcher script, so I decided to ship one along with Edalize for now. To use it, just set the environment variable EDALIZE_LAUNCHER=el_docker before Edalize gets called. Below is an example of first linting and then creating a GDSII file for SERV without a single local EDA tool. Pretty sweet, ain't it?

    #Add SERV to the current workspace
    fusesoc library add serv https://github.com/olofk/serv

    #Set the launcher command
    export EDALIZE_LAUNCHER=el_docker

    #Run linting with Verilator
    fusesoc run --target=lint serv

    #Make GDSII file of SERV with OpenLANE and sky130 PDK
    fusesoc run --target=sky130 serv
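For the curious, here is a rough sketch of what a launcher mechanism like this boils down to. It is not the code of el_docker or of Edalize. The helper names, the image table and the mount point are made up for illustration, and I'm assuming that the launcher is simply put in front of the tool command. The `docker run` flags used (`--rm`, `-v`, `-w`) are standard Docker options.

```python
# Illustrative sketch only: not the actual el_docker implementation.
import os
import shlex

# Hypothetical tool-to-image table. The real launcher has its own mapping.
IMAGES = {
    "yosys": "example.org/yosys:latest",
    "verilator": "example.org/verilator:latest",
}


def with_launcher(cmd, env=None):
    """Prefix cmd (a list of strings) with the launcher named in
    EDALIZE_LAUNCHER, or return it unchanged if the variable is unset."""
    env = os.environ if env is None else env
    launcher = env.get("EDALIZE_LAUNCHER", "")
    return shlex.split(launcher) + list(cmd)


def docker_command(cmd, workdir, images=IMAGES):
    """Translate a tool invocation into a docker run invocation.
    Tools without a known image are left to run locally."""
    tool = cmd[0]
    if tool not in images:
        return list(cmd)
    return [
        "docker", "run", "--rm",
        "-v", f"{workdir}:/src",  # mount the work directory in the container
        "-w", "/src",             # and run the tool from there
        images[tool],
    ] + list(cmd)


if __name__ == "__main__":
    print(with_launcher(["yosys", "-V"], env={"EDALIZE_LAUNCHER": "el_docker"}))
    print(docker_command(["yosys", "-V"], os.getcwd()))
```

Falling back to the unchanged command for unknown tools is what makes it possible to mix dockerized and local tools in the same build.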

Other things

Verilator typically generates an executable simulation that is subsequently run, but there are use cases where the model should instead be integrated in a larger system. For this reason, the verilator backend now has an exe option which can be set to false to stop before the final linking.

This development cycle has also seen a lot of improvements on the CI side, a reformatting of the source code for consistency using a tool called black, support for newer Libero versions and, as usual, a bunch of bug fixes.
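As a sketch of how this might look in an EDAM description, the snippet below sets the exe option for the verilator backend. The file and design names are placeholders, and I'm assuming here that the option is given as the string 'false', so check the backend documentation for the exact type before relying on it.

```python
# EDAM description for a Verilator model that is built but not linked.
# Assumption: 'exe' is passed as the string 'false'.
edam = {
    "name": "blinky",
    "toplevel": "blinky",
    "files": [{"name": "blinky.v", "file_type": "verilogSource"}],
    "tool_options": {
        "verilator": {
            "mode": "cc",     # generate a C++ model
            "exe": "false",   # stop before the final linking
        }
    },
}

print(edam["tool_options"]["verilator"])
```

The dictionary is then handed to the verilator backend in the usual way, and whatever larger system embeds the model takes care of the final link step.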

I hope you all enjoy this new version of Edalize. As always, there are plenty of things going on and we would love some help, so if you want to get involved you are most welcome to join the chat at gitter.im/librecores/edalize or look through the code, issues or PRs at https://github.com/olofk/edalize